I’m approaching the end of a graduate program in Computer Science, so the recent breakthroughs in generative artificial intelligence have been of great interest to me. More than once, I’ve been humbled by the ability of tools like ChatGPT or Bard to spit out code in seconds that would have taken me (an admittedly average programmer) hours to produce. I believe that these systems will eventually take over many of the tasks programmers spend their time on today.

But there is a great deal of anxiety in the field, as many people simply don’t trust AI systems to do the “right” thing when left to their own devices. For example, intelligent agents – programmatic AI entities developed to perceive their environment, make decisions, and take appropriate action – will often do exactly what we tell them to, but not exactly what we mean them to. In Computer Science, this is referred to as “the alignment problem.” One of my favorite examples is from Brian Christian’s wonderful book of the same name. He describes a team of researchers at OpenAI who, in 2016, set about creating an intelligent agent that could play a boat racing video game called CoastRunners. When the researchers checked in on the agent, which learned to play through reinforcement learning, they found surprising behavior. Rather than attempt to finish the race first, the AI simply directed its boat to make continuous circles in a specific lagoon with power-ups that rewarded it with generous point totals. The agent had done what the researchers told it to (score as many points as possible), but not what they meant it to (win the race). They had designed the agent’s reward system poorly and gotten slapstick results. It’s easy to imagine a world, however, where the results wouldn’t be so amusing (think paperclips).
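To make the gap between “told” and “meant” concrete, here is a minimal Python sketch. It is not OpenAI’s code, and it involves no actual learning: the two strategies, their point values, and the function names are all invented for illustration. It simply shows that the same agent picks a different strategy depending on which reward it is asked to maximize.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """A hypothetical strategy the boat could follow (numbers are made up)."""
    name: str
    points_per_step: float  # in-game points collected per time step
    finishes_race: bool


def points_reward(policy: Policy, steps: int) -> float:
    """What the agent was told to maximize: total in-game points."""
    return policy.points_per_step * steps


def intended_reward(policy: Policy, steps: int) -> float:
    """What the designers meant: only finishing the race counts."""
    return 1.0 if policy.finishes_race else 0.0


POLICIES = [
    Policy("race to the finish", points_per_step=1.0, finishes_race=True),
    Policy("circle the lagoon", points_per_step=3.0, finishes_race=False),
]


def best_policy(reward_fn, steps: int = 1000) -> Policy:
    """Pick whichever strategy scores highest under the given reward."""
    return max(POLICIES, key=lambda p: reward_fn(p, steps))


if __name__ == "__main__":
    print("Told to maximize points:", best_policy(points_reward).name)
    print("Meant to win the race:  ", best_policy(intended_reward).name)
```

Under the points reward, circling the lagoon wins; under the intended reward, racing to the finish does. Nothing about the agent changed, only the measure it was handed.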

Alignment problems aren’t exclusive to the world of artificial intelligence. They are everywhere in human society, where we frequently delegate tasks to other people (or groups) in the hopes that they will achieve them as we would. The problem, of course, is that getting humans aligned with one another is no less tricky.

-James Coleman

The most clicked link from last week's issue (~7% of opens) was Blocklayer, a website with dozens of calculator widgets for construction projects. In the Members' Slack, a conversation about dumb TVs in the #community-lunch chat led Eric to share an impassioned argument in favor of using a commercial display for digital signage instead of a TV – they have no ads, no tracking, and can be rated for 24/7 operation if you really need to binge that new show.

MAKING & MANUFACTURING.

Economists sometimes refer to the problem I’m describing as Goodhart’s law, which is stated succinctly as “when a measure becomes a target, it ceases to be a good measure.” People, like boat racing AI agents, can game the metrics we use to evaluate them.

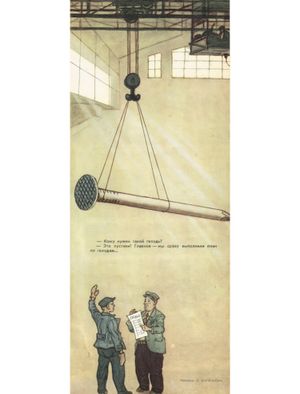

The canonical example of this law comes from nail manufacturing in the former Soviet Union. The story goes that plant managers, realizing they were only evaluated on the quantity of the nails they produced, began to make large numbers of small, useless nails. Central planners caught on to this trick and switched to a system where plants were evaluated exclusively on the weight of what they produced. Ever dynamic, the managers then began to make incredibly large and heavy, but equally useless, nails.

The story is likely apocryphal: the example comes from a 1960s political cartoon in the Soviet satirical magazine Krokodil (see header image). Though the nail factory likely never existed, the cartoonist was poking fun at a real phenomenon. In the late 1960s, a factory in Tambov (Central Russia) that produced machines for vulcanizing tires fixated on a single measurement in a similar way. A significant revision to the plant’s manufacturing line was proposed that would have increased speed, saved labor, and reduced the weight of the resulting vulcanizing machines. Plant managers chose not to make the change because industrial equipment was sold by weight, and the lighter machines would have generated less revenue and, consequently, fewer incentive funds from the central government.

DISTRIBUTION & LOGISTICS.

One solution to Goodhart’s law is to design rewards and targets more carefully. With the Tambov factory, for example, it might have been better to price the vulcanizing machines based on their capability, rather than the simple proxy of weight. Even with the apocryphal nail factory, evaluating the plant on the production of a standardized nail spec would have done the trick.

But our objectives are often nuanced and complex, and organizations struggle to even agree on what they should be. Even when we do manage to get on the same page, it's not always straightforward to design incentives that will encourage the right behavior. How, for example, do you design a set of targets for being a good employee, a good employer, or even an informative newsletter?

One way is to think differently about how you communicate objectives. I once worked as a manager at an industrial parts distributor that shipped out thousands of orders per day. As you might expect, there were productivity and quality expectations for each role in the operation, from the people who pulled items from the shelves to the people who loaded completed orders onto trucks. It was a constant challenge to convey the company’s high expectations to workers, and we thought a lot about Goodhart’s law and the danger of turning measures into targets.

As a principle, managers rarely spelled out exact targets because we worried that people would fixate on the numbers at the expense of safe and effective work. We also worried that the numbers might become a ceiling for performance, rather than a floor. To be sure, we had specific numeric expectations but didn’t broadcast them, at least not explicitly.

Instead, we coached workers on the specific steps in their process, shadowing and giving feedback on the different aspects of a worker’s routine. The idea was that if they had a good process, they would be productive and error-free. “Don’t coach the batting average, coach the swing” was a frequent refrain, and the company did quite well with this approach.

PLANNING & STRATEGY.

I got chills when I read about how OpenAI thought about solving their alignment problems with the CoastRunners boat racing agent. In a postmortem, they recommended teaching and learning through observation:

Learning from demonstrations allows us to avoid specifying a reward directly and instead just learn to imitate how a human would complete the task. In this example, since the vast majority of humans would seek to complete the racecourse, our RL (reinforcement learning) algorithms would do the same.

In addition … we can also incorporate human feedback by evaluating the quality of the episodes ... It’s possible that a very small amount of evaluative feedback might have prevented this agent from going around in circles. [emphasis added]
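The idea of learning from demonstrations can be sketched in a few lines of Python. This is a toy under stated assumptions, not the method OpenAI used: the state names, action names, and demonstration data are hypothetical, and the “learning” is just a majority vote over what human players did in each situation.

```python
from collections import Counter, defaultdict


def fit_imitation_policy(demonstrations):
    """Map each state to the action human demonstrators chose most often there."""
    counts = defaultdict(Counter)
    for state, action in demonstrations:
        counts[state][action] += 1
    return {state: c.most_common(1)[0][0] for state, c in counts.items()}


# Hypothetical (state, action) pairs recorded from human players.
demos = [
    ("near_lagoon", "keep_racing"),
    ("near_lagoon", "keep_racing"),
    ("near_lagoon", "circle_for_points"),
    ("open_water", "keep_racing"),
]

policy = fit_imitation_policy(demos)

if __name__ == "__main__":
    for state, action in policy.items():
        print(f"{state}: {action}")
```

No reward is specified anywhere. Because most of the recorded humans kept racing past the lagoon, the imitating agent does too.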

The parts distributor I mentioned earlier was doing something quite similar. We avoided specifying productivity targets directly and instead gave feedback on the good or bad work behaviors we observed: “I noticed that you didn’t check the part number on the storage location against the part number on the order fulfillment document. I’ve seen other folks grab the wrong item when they skip this step.” By encouraging a behavior rather than a specific metric (make fewer than x errors per y lines filled), the organization believed it got better outcomes.

I have to be honest and say that the people I worked with often found this overall management approach frustrating; I frequently heard complaints that it was unfair to be evaluated on a target you don’t know. These were very tough conversations for me because I agreed with the critique, while seeing the benefits of the approach.

The situations I’ve been discussing are also examples of the principal-agent problem, which arises when the priorities of a person or group (the principal) come into conflict with those of the person or group that acts on their behalf (the agent). Principal-agent problems are most acute when the two parties’ interests diverge or when one party has information the other lacks.

In the above examples, I’ve implicitly taken the perspective of the principal, but it’s worth considering whether or not that’s always the right thing to do. Would it, for example, be better to accept less efficiency or quality in warehouse operations (perhaps disappointing customers) in favor of a more predictable set of expectations for employees?

The classic solution to a principal-agent problem is closely aligning the incentives of the principal and agent. If everyone can agree that serving the customer well is the best approach, how can the reward structures for employees reflect that? Bonuses for exceptional quality and productivity? Profit sharing? The parts distributor I worked for actually did both of these things, but no system is perfect and there was still tension around expectations.

INSPECTION, TESTING & ANALYSIS.

I think the recent discourse about “returning to the office” is an example of a principal-agent problem. After several years of flexible workplace requirements as a result of the pandemic, many large companies are asking that their workers spend more time physically present at office locations. Even teleconference software provider Zoom has called people back to the office, at least part time. These new requirements are not without controversy.

Company leaders (the principals) believe that their businesses work better when workers are present physically, citing improved culture, better feedback, and more support for junior members of staff. Workers (the agents) argue that the decreased commute times, private work setups, and flexibility make them happier and more productive. There likely exists a point of equilibrium that achieves many of these aims, without leaving the principal or the agent completely satisfied.

These news stories reminded me of a surprising argument from Behemoth: A History of the Factory and the Making of the Modern World by Joshua Freeman. I had always believed that modern factories developed in England because of the advent of spinning and weaving machines at the dawn of the Industrial Revolution. Large machines, I reasoned, required lots of power, big buildings, and many workers to operate. Freeman presented an alternative view, namely that:

...centralizing gave manufacturers the ability to better supervise and coordinate labor, the work of many individuals who under the putting-out system would be supervising their own labor (and that of family members) in far flung domestic settings.

I suspect that if modern tech companies were being completely honest, their rationale for returning to the office would sound quite similar to this. A lot has changed since the first cotton mills appeared in the 1700s, but the challenge of aligning principals and agents hasn’t. It is a struggle that we will take into the next age of work as well, whether the agents are human or artificial.

SCOPE CREEP.

Throughout this newsletter, I’ve drawn parallels between the way managers work with people and how researchers work with AI agents. Let me be clear: I don’t think we should treat people like AI, or that AI systems are people (at least not yet). My core point is that alignment is something that human beings have struggled with for a long time and there aren’t always simple or elegant solutions.

Thanks as always to Scope of Work’s Members and Supporters for making this newsletter possible. Thanks also to Brian, Sam, Jack, and Heath for providing the vocabulary and stories that unlocked this topic for me.

You Matter,

James

p.s. - We care about inclusivity. Here’s what we’re doing about it.